April 7, 2025


Hadoop was installed by following these steps:

- We download and unpack the Hadoop software:

$ cd /home/bigdata

$ wget https://archive.apache.org/dist/hadoop/common/hadoop-3.4.1/hadoop-3.4.1.tar.gz

$ tar -xzf hadoop-3.4.1.tar.gz

$ mv hadoop-3.4.1 hadoop

- We customize Hadoop:

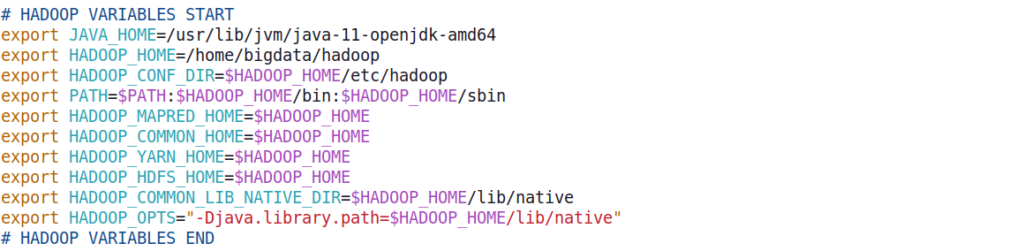

- Environment variables.

The following is added:

# HADOOP VARIABLES START

export JAVA_HOME=/usr/lib/jvm/java-11-openjdk-amd64

export HADOOP_HOME=/home/bigdata/hadoop

export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

export HADOOP_MAPRED_HOME=$HADOOP_HOME

export HADOOP_COMMON_HOME=$HADOOP_HOME

export HADOOP_YARN_HOME=$HADOOP_HOME

export HADOOP_HDFS_HOME=$HADOOP_HOME

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib/native"

# HADOOP VARIABLES END

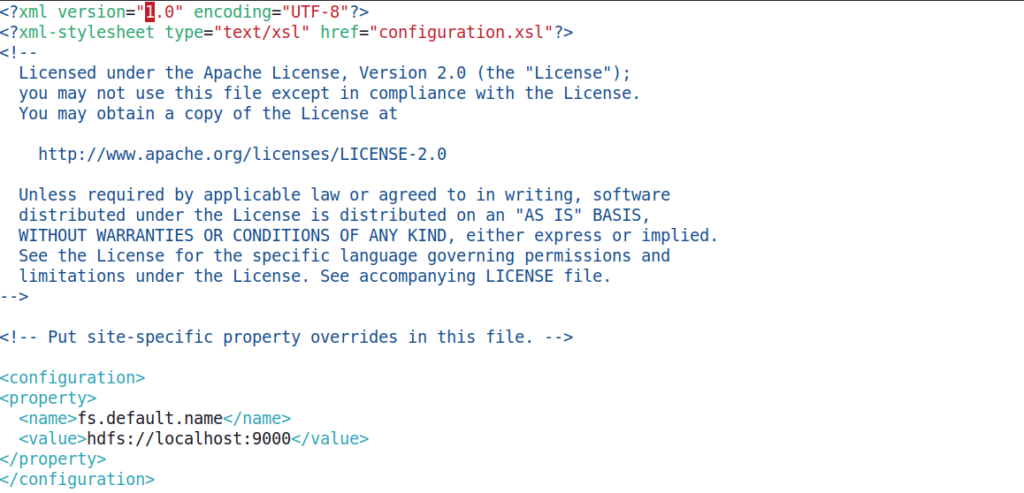
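The post does not say which file receives this block; a common choice is the Hadoop user's `~/.bashrc`, and that is the assumption here. The sketch below appends the block only when its start marker is missing, so running it twice does not duplicate the variables. The function name `append_hadoop_env` is hypothetical.

```shell
# Append the Hadoop variable block to a shell profile, but only once.
# The target file is passed as an argument (assumption: ~/.bashrc in practice).
append_hadoop_env() {
  target="$1"
  # The marker line is the first line of the block shown above.
  if grep -qF '# HADOOP VARIABLES START' "$target" 2>/dev/null; then
    echo "Hadoop block already present in $target"
    return 0
  fi
  # Quoted 'EOF' keeps $HADOOP_HOME and $PATH literal in the file.
  cat >> "$target" <<'EOF'
# HADOOP VARIABLES START
export JAVA_HOME=/usr/lib/jvm/java-11-openjdk-amd64
export HADOOP_HOME=/home/bigdata/hadoop
export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin
export HADOOP_MAPRED_HOME=$HADOOP_HOME
export HADOOP_COMMON_HOME=$HADOOP_HOME
export HADOOP_YARN_HOME=$HADOOP_HOME
export HADOOP_HDFS_HOME=$HADOOP_HOME
export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native
export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib/native"
# HADOOP VARIABLES END
EOF
  echo "Hadoop block appended to $target"
}
```

A typical call would be `append_hadoop_env "$HOME/.bashrc"`, followed by opening a new shell or running `source "$HOME/.bashrc"` so the variables take effect.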

- /home/bigdata/hadoop/etc/hadoop/core-site.xml

The following is added:

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://localhost:9000</value>

</property>

</configuration>

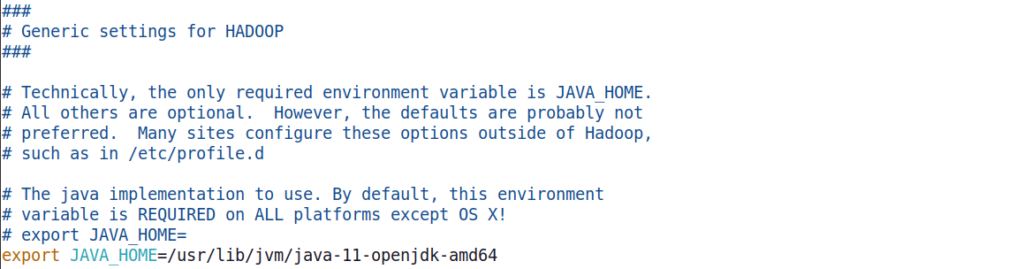
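To confirm that an edited file really carries the intended value, the property can be read back from the command line. The following is a minimal sketch that uses plain text matching rather than a real XML parser; it assumes `<name>` and `<value>` each sit on their own line, as in the file above. The function name `get_hadoop_property` is hypothetical.

```shell
# Print the <value> that follows a given <name> in a Hadoop *-site.xml file.
# Plain-text matching only: assumes one tag per line, as in the files shown here.
get_hadoop_property() {
  file="$1"
  key="$2"
  awk -v key="$key" '
    # Remember that the requested property name has been seen.
    index($0, "<name>" key "</name>") { found = 1; next }
    # The next line carrying a <value> tag belongs to that property.
    found && /<value>/ {
      sub(/.*<value>/, "")
      sub(/<\/value>.*/, "")
      print
      exit
    }
  ' "$file"
}
```

The function takes the path to the file and the property name as written in that file. Once the Hadoop binaries are on the `PATH`, `hdfs getconf -confKey fs.defaultFS` reports the value Hadoop itself resolves; `fs.defaultFS` is the current name of the property and `fs.default.name` is its deprecated alias.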

- /home/bigdata/hadoop/etc/hadoop/hadoop-env.sh

The following is added:

export JAVA_HOME=/usr/lib/jvm/java-11-openjdk-amd64

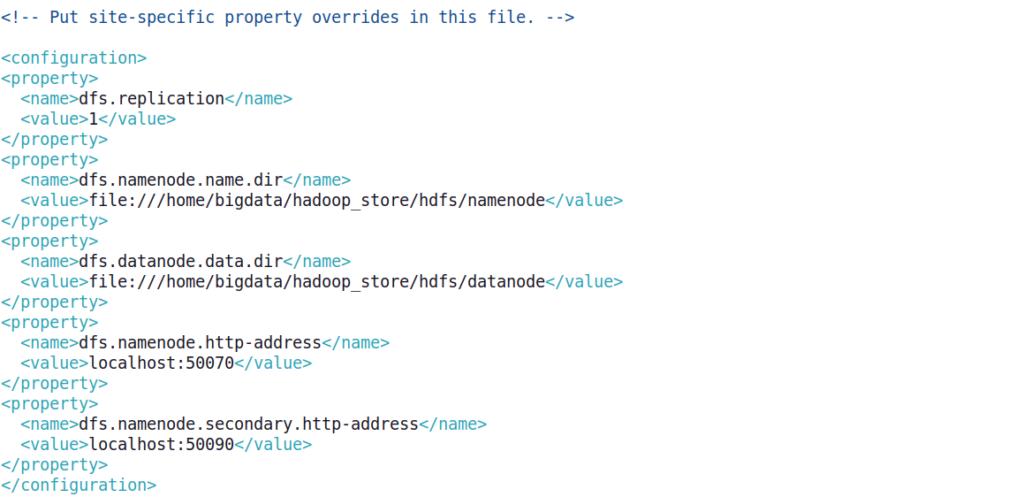
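The Hadoop scripts fail early if `JAVA_HOME` does not point at a real Java installation, so it can be worth checking the path before going further. A minimal sketch, with the hypothetical helper name `check_java_home`:

```shell
# Succeed if DIR looks like a Java home, i.e. it contains an executable bin/java.
check_java_home() {
  dir="$1"
  if [ -x "$dir/bin/java" ]; then
    echo "JAVA_HOME looks usable: $dir"
  else
    echo "No executable found at $dir/bin/java" >&2
    return 1
  fi
}
```

For the value used in this post the call would be `check_java_home /usr/lib/jvm/java-11-openjdk-amd64`.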

- /home/bigdata/hadoop/etc/hadoop/hdfs-site.xml

The following is added:

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/home/bigdata/hadoop_store/hdfs/namenode</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/home/bigdata/hadoop_store/hdfs/datanode</value>

</property>

</configuration>

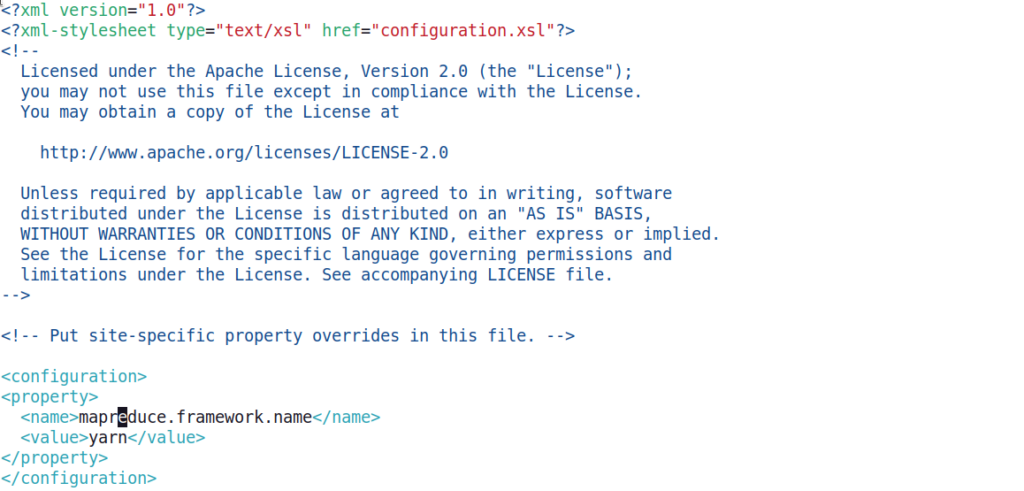
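The post does not show the two storage directories being created. Hadoop will typically create them itself when the parent directory is writable by the `bigdata` user, but creating them up front makes ownership and permissions explicit. A minimal sketch, assuming it is run as that user; the function name `create_hdfs_dirs` and the `700` mode are illustrative choices, not taken from the post.

```shell
# Create the NameNode and DataNode directories referenced in hdfs-site.xml.
# BASE is the common parent, e.g. /home/bigdata/hadoop_store/hdfs.
create_hdfs_dirs() {
  base="$1"
  mkdir -p "$base/namenode" "$base/datanode" || return 1
  # Assumption: keep metadata and block storage private to the Hadoop user.
  chmod 700 "$base/namenode" "$base/datanode"
}
```

With the paths from the configuration above, the call would be `create_hdfs_dirs /home/bigdata/hadoop_store/hdfs`.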

- /home/bigdata/hadoop/etc/hadoop/mapred-site.xml

The following is added:

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

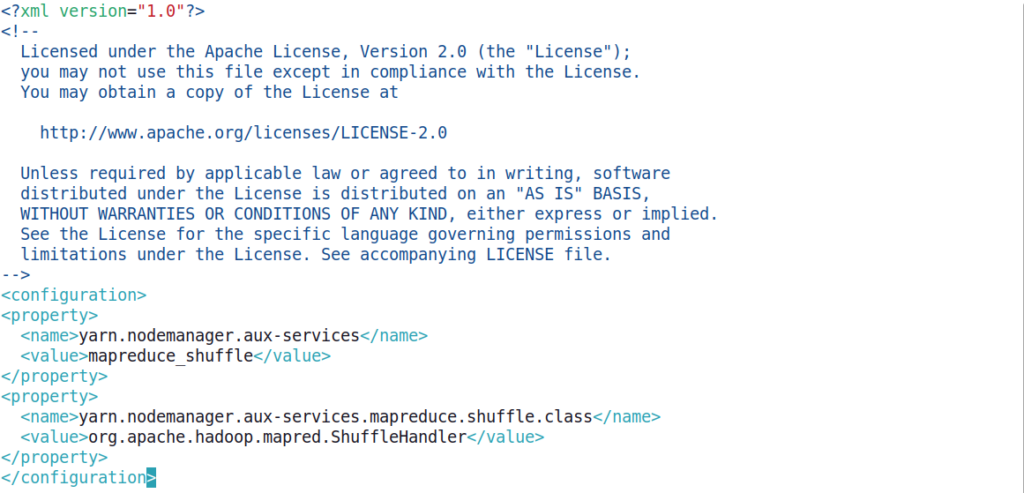

- /home/bigdata/hadoop/etc/hadoop/yarn-site.xml

The following is added:

<configuration>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

</configuration>
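With four XML files edited by hand, a missing closing tag is an easy mistake that only surfaces when the daemons start. The sketch below is a rough sanity check, not XML validation: it counts lines containing `<property>` and `</property>` in each file and reports any file where the two differ. It assumes one tag per line, as in the files above; both function names are hypothetical.

```shell
# Succeed if FILE has as many <property> lines as </property> lines.
check_property_balance() {
  file="$1"
  [ -r "$file" ] || return 1
  opens=$(grep -c '<property>' "$file")
  closes=$(grep -c '</property>' "$file")
  [ "$opens" -eq "$closes" ]
}

# Run the check over the four site files in a Hadoop configuration directory.
check_hadoop_conf_dir() {
  conf_dir="$1"
  status=0
  for name in core-site.xml hdfs-site.xml mapred-site.xml yarn-site.xml; do
    if check_property_balance "$conf_dir/$name"; then
      echo "ok      $name"
    else
      echo "suspect $name"
      status=1
    fi
  done
  return "$status"
}
```

For this installation the call would be `check_hadoop_conf_dir /home/bigdata/hadoop/etc/hadoop`.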

- We format the filesystem:

$ cd /home/bigdata/hadoop

$ bin/hdfs namenode -format

- We create a service for startup and shutdown:

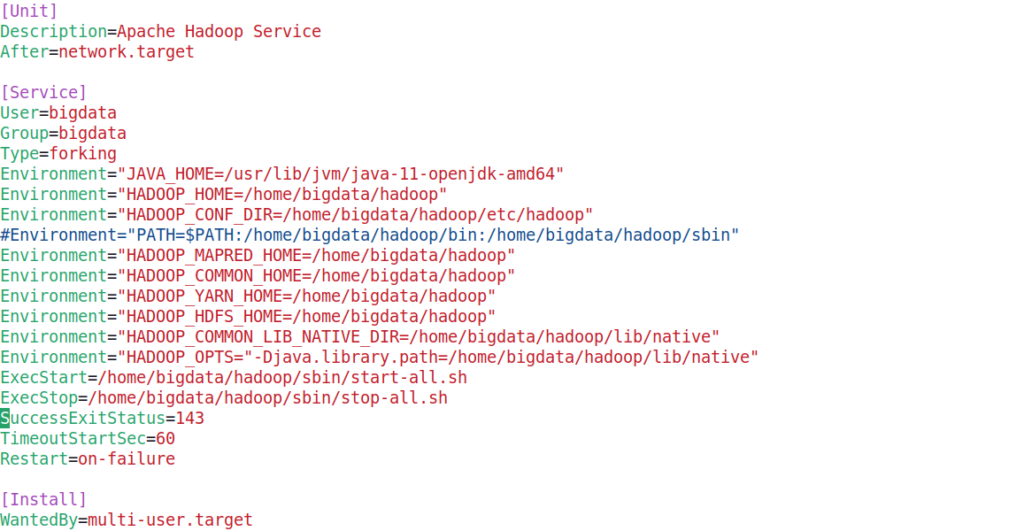

$ sudo vi /etc/systemd/system/hadoop.service

[Unit]

Description=Apache Hadoop Service

After=network.target

[Service]

User=bigdata

Group=bigdata

Type=forking

Environment="JAVA_HOME=/usr/lib/jvm/java-11-openjdk-amd64"

Environment="HADOOP_HOME=/home/bigdata/hadoop"

Environment="HADOOP_CONF_DIR=/home/bigdata/hadoop/etc/hadoop"

Environment="HADOOP_MAPRED_HOME=/home/bigdata/hadoop"

Environment="HADOOP_COMMON_HOME=/home/bigdata/hadoop"

Environment="HADOOP_YARN_HOME=/home/bigdata/hadoop"

Environment="HADOOP_HDFS_HOME=/home/bigdata/hadoop"

Environment="HADOOP_COMMON_LIB_NATIVE_DIR=/home/bigdata/hadoop/lib/native"

Environment="HADOOP_OPTS=-Djava.library.path=/home/bigdata/hadoop/lib/native"

ExecStart=/home/bigdata/hadoop/sbin/start-all.sh

ExecStop=/home/bigdata/hadoop/sbin/stop-all.sh

SuccessExitStatus=143

TimeoutStartSec=60

Restart=on-failure

[Install]

WantedBy=multi-user.target
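Quoting slips are easy to make in a unit file with this many `Environment=` lines, and systemd reads each assignment as a single `"NAME=value"` token. The sketch below flags any `Environment=` line with an odd number of double quotes; it is a rough text check under that assumption, and the function name `find_unbalanced_env_lines` is hypothetical.

```shell
# Print Environment= lines whose double quotes do not pair up.
find_unbalanced_env_lines() {
  unit_file="$1"
  awk '/^Environment=/ {
         line = $0
         n = gsub(/"/, "", line)   # gsub returns how many quotes it removed
         if (n % 2 != 0) print FILENAME ":" FNR ": " $0
       }' "$unit_file"
}
```

For the file created here the call would be `find_unbalanced_env_lines /etc/systemd/system/hadoop.service`; no output means every line passed. After saving the unit file, `sudo systemctl daemon-reload` makes systemd re-read its unit definitions, and `systemd-analyze verify /etc/systemd/system/hadoop.service` offers a more thorough check where that tool is available.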

- Start all services:

$ sudo systemctl start hadoop

- Stop all services:

$ sudo systemctl stop hadoop

- Status of all services:

$ sudo systemctl status hadoop

- We enable it at boot:

$ sudo systemctl enable hadoop

Tags: Hadoop

Category: Big Data